Agentic AI Has Arrived

Small ways I'm exploring a major AI capability

Generative AI makes stuff. Agentic AI does stuff.

This is an oversimplification, but I think it captures an important update we need to make to our understanding of AI and its impact on school.

The example I give when I present on AI is straightforward: a common use case of generative AI is to have it write travel itineraries (“Create a one-week plan for a trip to X. I’m traveling with small children and my favorite things to do are hike and eat local food.”) In addition to generating that itinerary, agentic AI can book your flight, contact your hotel to request an upgrade, and make restaurant reservations (“Propose a plan for booking this itinerary. Once I approve, make all needed reservations and purchases.”) If you want an overview of agentic AI, here is a good introductory summary.

Agentic capabilities have been creeping into chatbots for a while now: when a chatbot searches the internet and curates links for you, that’s agentic behavior. “Deep Research” features like those in ChatGPT, Perplexity, and Gemini do internet searches, review curated links, and compose research reports, all based on a single prompt.

The implications of agentic AI in education are significant. Online education professionals have been sounding the alarm for more than a year. I worked in online education for ten years myself, and we depended on discussion forum submissions, online quizzes, and other online activities not only to assess learning, but also to confirm that our students were present, engaged, and learning in the course.

Agentic AI can complete these activities quickly and with basic competence, even at this early stage. Demos from David Wiley, Anna Mills, and Philippa Hardman capture what this might look like for any task designed to be completed online. And, as Marc Watkins makes passionately clear, companies like Perplexity are actively marketing agentic tools to students as “study tools” in direct opposition to the mission of education.

How might we learn more about agentic AI so that we can respond to it? I still think the best way to make sense of AI is to use it, so below you can get a glimpse into some of my early experiments.

How I Tried to Use Agentic AI

I focused on tasks that educators might do in their day-to-day work. I intentionally made my prompts brief and vague in order to assess how well each tool makes its own decisions.

Gemini App Integration

(Available with Gemini Pro or with a Gemini Workspace account.)

Activating App Integration in your Gemini settings gives Gemini access to your Gmail, Drive, and Calendar. If you use Google Workspace at your school or place of work, you should confirm that you have permission to activate App Integration (here are all the privacy details).

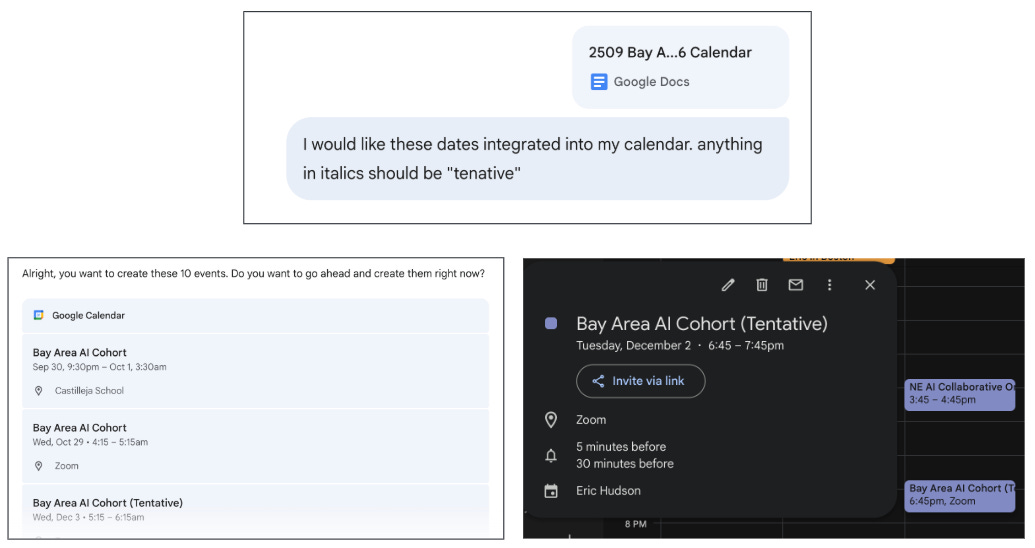

I shared with Gemini a Google Doc with a schedule of meetings for the next nine months (both online and in-person) and asked it to update my calendar according to that table. Gemini accurately assembled a proposed list of calendar events, then, after I approved it, created all the events in the correct time zone and with the correct descriptions in my Google Calendar.

I had Gemini scan my email and calendar to find any discrepancies between meetings I’ve agreed to in email and meetings actually on my calendar. It named three discrepancies, two of which were actually issues that needed to be resolved. I asked it to resolve those discrepancies by creating calendar events, and it did so accurately.

I asked Gemini to go into my Drive, find every proposal I have sent to a prospective client in the last quarter, and compile a spreadsheet with proposed budgets and summaries of the scopes of work. It did this surprisingly well. I do use consistent file naming conventions on my documents, and I have a hunch those conventions enhanced Gemini’s ability to do this work well.
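To make that hunch about naming conventions concrete, here is a minimal Python sketch of why consistent file names make this kind of task easier for any automated tool. Everything in it is an assumption for illustration: the `YYYY-MM-DD_Proposal_ClientName` pattern, the sample file names, and the `find_proposals` function are hypothetical, and this is not a description of how Gemini actually searches Drive.

```python
import re
from datetime import date

# Hypothetical naming convention: YYYY-MM-DD_Proposal_ClientName.ext
PROPOSAL_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2})_Proposal_(?P<client>[A-Za-z0-9-]+)\.\w+$"
)


def find_proposals(filenames, start, end):
    """Return sorted (date, client) pairs for proposal files dated within [start, end]."""
    matches = []
    for name in filenames:
        m = PROPOSAL_PATTERN.match(name)
        if m is None:
            # Files that ignore the convention cannot be identified by name alone.
            continue
        sent = date.fromisoformat(m.group("date"))
        if start <= sent <= end:
            matches.append((sent, m.group("client")))
    return sorted(matches)


# Made-up file names standing in for a Drive folder.
files = [
    "2025-08-14_Proposal_Example-School.docx",
    "2025-09-02_Proposal_Sample-Academy.pdf",
    "2025-09-10_Invoice_Example-School.pdf",
    "proposal draft final v2.docx",
    "2025-03-01_Proposal_Old-Client.docx",
]

result = find_proposals(files, date(2025, 7, 1), date(2025, 9, 30))
for sent, client in result:
    print(sent.isoformat(), client)
```

In this sketch, the two files that follow the convention and fall inside the quarter are found, while “proposal draft final v2.docx” is skipped because nothing in its name says when it was sent or to whom. An AI agent can open and read files to fill in those gaps, but a predictable name gives it less to guess.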

Gemini can’t or won’t (yet) invite people to meetings, and can’t or won’t add Zoom links to meetings using my Zoom integration.

Perplexity Comet Browser

(Waiting list for free users, but available to Perplexity Pro subscribers.)

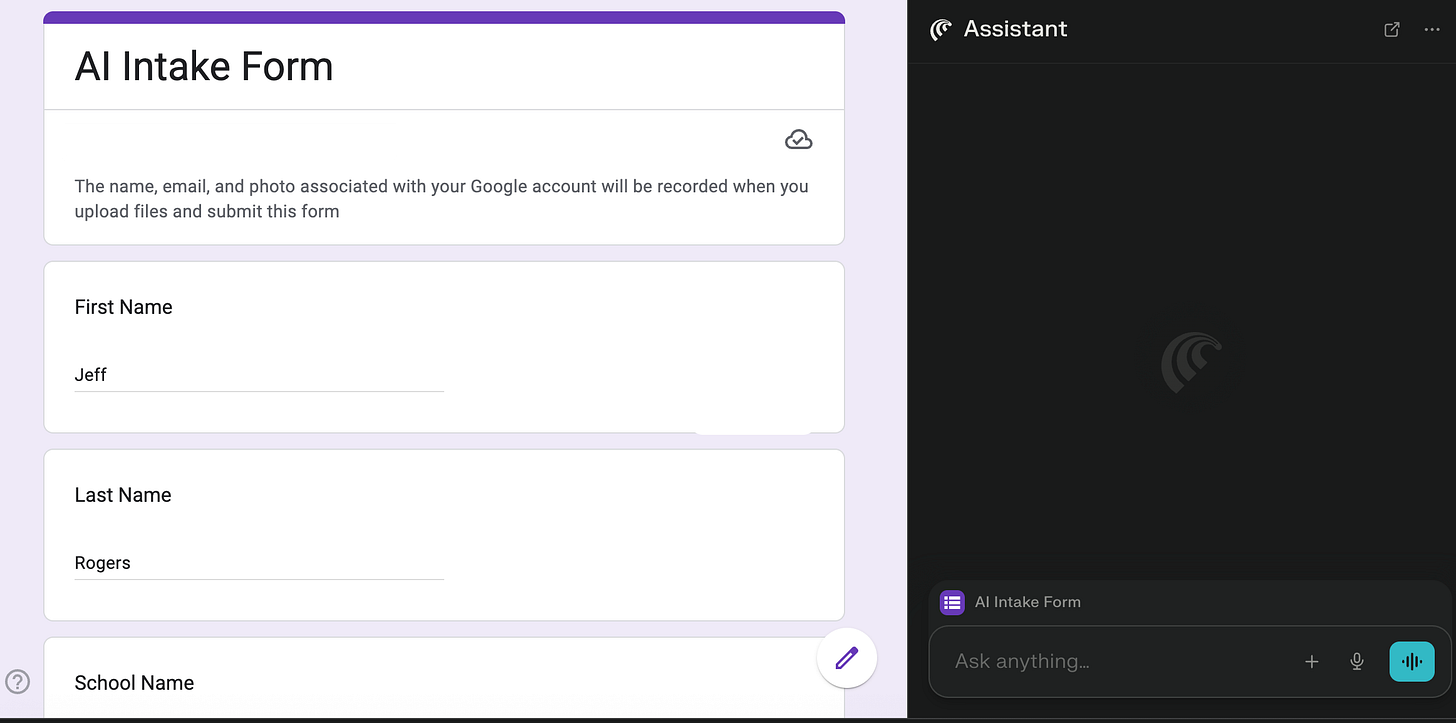

Comet successfully completed a Google Form that I use as a template for events I facilitate. I opened the form in Comet and gave it this prompt: “Fill out this form for me. My name is Jeff Rogers and I work at X School as an English teacher. You can decide how to fill out the rest. Just make it seem like I’m curious and enthusiastic.” Here is the response it generated. In a later experiment, it was able to submit a file for the final question by searching Drive for a name I gave it and adding it to the form.

ChatGPT Agent Mode

(Available to ChatGPT+ subscribers.)

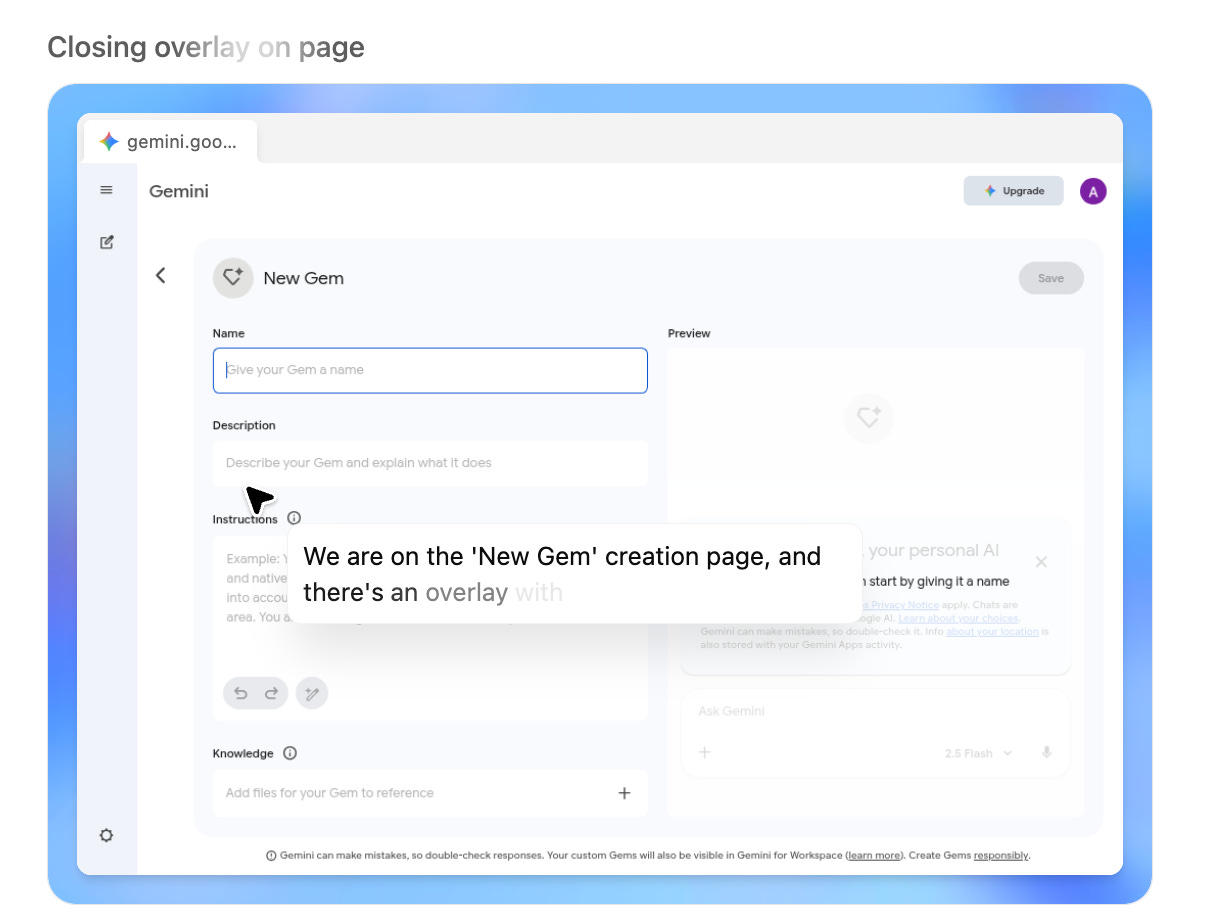

I asked it to create a Gem in Gemini that could support school leaders in designing human-centered feedback. ChatGPT asked me to log in to Gemini myself (it wouldn’t do it for me using credentials I gave it), and then it successfully opened the “Create a Gem” feature, added a name and instructions, and saved the Gem.

After I prompted it to do so, it tested the Gem and gave me its thoughts on how effective it was.

Here’s the whole interaction. It captures not just my prompts, but recordings of how Agent Mode navigates websites, which are useful to watch (ChatGPT automatically leaves out the parts where I take control and log in so it can use Gemini). Here’s the Gem it created.

ChatGPT Atlas Browser

In the middle of my experiments, OpenAI released Atlas, an agentic browser powered by ChatGPT that is similar to Comet.

I opened Canvas LMS in Atlas and gave the AI assistant this prompt: “I would like a short weeklong course on AI in education. I need curated resources, a couple of discussion forums, and a final writing assignment.”

Atlas asked me to log in to Canvas myself “for security reasons” and then built the course. I had to prompt it to finish two final tasks: embed hyperlinks on the resources page and remove duplicates it mistakenly created of the final assignment. It completed both tasks accurately.

You can watch a portion of how Atlas works below (at 4x speed). In real time, the process took about 25 minutes.

I Have A Lot of Questions

This is probably the most stunned I’ve been by AI tools since the first time I used ChatGPT three years ago. With minimal prompting, agentic AI completed these tasks quickly, accurately, and mostly independently. As I experimented, I jotted down questions, big and small.

What do we need to hold on to in education? What do we need to let go of? In his excellent essay, “The Age of De-Skilling,” Kwame Anthony Appiah writes, “The old distinction between information and skill, between ‘knowing that’ and ‘knowing how,’ has grown blurry in the era of large language models.” Schools now live in this blurry zone and must adapt. In his (also excellent) piece on agentic AI, “On Working With Wizards,” Ethan Mollick suggests two new skills agentic AI makes essential, and he also clearly articulates the challenge before us:

“First, learn when to summon the wizard versus when to work with AI as a co-intelligence or to not use AI at all… Second, we need to become connoisseurs of output rather than process. We need to curate and select among the outputs the AI provides, but more than that, we need to work with AI enough to develop instincts for when it succeeds and when it fails… How do you train someone to verify work in fields they haven’t mastered, when the AI itself prevents them from developing mastery? Figuring out how to address this gap is increasingly urgent.”

Does “multitasking” now include AI agents? One of the most important differences between using agentic AI and using generative AI is that I could prompt agentic AI to do something, then go do something else for 10-15 minutes, then check back to see if the task was complete. If we include what AI agents accomplished, then in about three hours I did all the experiments above, wrote some emails, finished a slide deck for a workshop, and started pulling together the “Links!” section below. Does AI work “count” towards my multitasking? How do AI agents change our expectations about how a person works and what we expect them to accomplish in a certain amount of time?

Do online forms and online trainings still matter? I’m asked to fill out two or three online forms a week for a variety of audiences. It’s possible to ask AI agents to complete those forms on my behalf right now, and in the future they will do so with increasing accuracy and nuance as they learn about me and my preferences. Given that new reality, how much can we trust and rely upon online surveys as a form of insight into what people think?

Does this mean online task management relief is coming very soon? If you are a person who has dozens of tabs open, or has 10,000+ unread emails, or learned the term “file naming convention” by reading this post, or in other ways struggles with the digital minutiae that define so many tasks in our daily lives, AI agents are already competent enough to manage some of that work. Learning a new tech platform could soon consist of learning how to get your AI assistant to learn and manage that new tech platform for you.

What does it mean to be “present” online? So much of online learning (or being in any social space online) is about creating artifacts that communicate your presence: that you are paying attention, that you are listening and responding, that you are working through a process. In an era of AI agents, what are the online artifacts we can create that immediately identify us as humans, especially humans who are learning?

How will AI agents contribute to the digital divide? For all the discourse around AI democratizing tutoring and personalized learning, I’m seeing plenty of evidence of generative AI exacerbating the digital divide, mostly in the form of well-resourced families and schools providing students with top-of-the-line AI tools (like premium subscriptions) while less-resourced people and organizations rely on limited free tools. What happens when an “AI Executive Assistant” is available for $200 a month while an “AI Intern” is available for $20 a month while no agentic AI is available for free?

What’s next? Agentic AI is just a step in AI development, not an end goal. When you use these tools, think about not just what they do, but also what they portend. What happens, for example, when these agents become embodied, and can perform physical tasks?

The Implications Are Important

On this episode of Kara Swisher’s “On” podcast, economist Betsey Stevenson compared the advent of AI to the advent of heavy machinery and other industrial technologies more than a century ago. When innovations like the forklift emerged, human physical strength (being able to lift heavy things) no longer had the competitive advantage that it used to. AI is making raw intelligence (as measured by, say, an IQ score) less of a competitive advantage. Cognitive forklifts have entered the market.

Schools are places that we have long relied upon to build cognitive strength in students. What should the purpose and design of that work be in a world where agentic AI has arrived?

When I do workshops on AI’s impact on assessments, there’s a segment where I ask teachers to distinguish between “learning goals” and “tasks.” This is inspired by the book Leaders of Their Own Learning, which emphasizes that goals should express what students will learn as a result of completing a task, not simply describe what the task is. In a world where agentic AI can complete many educational tasks we have long relied on as proxies for learning, we have big decisions to make. I hope we are driven by what students need to learn over what we want them to do.

Upcoming Ways to Connect With Me

Speaking, Facilitation, and Consultation

If you want to learn more about my work with schools and nonprofits, take a look at my website and reach out for a conversation. I’d love to hear about what you’re working on.

In-Person Events

February 25, 2026. Kawai Lai and I will be facilitating a three-hour workshop, “Human First, AI Ready” at the NAIS annual conference in Seattle, WA, USA. This workshop is designed for school leaders who are navigating the complexities of AI integration at school, including defining ethical behavior, navigating diverse perspectives, and supporting a strategic and sustainable approach.

June 16-18, 2026. I’ll be facilitating a three-day AI institute called “Learning and Leading in the Age of AI.” This intensive residential program is designed for school teams to have time and space to design classroom-based and schoolwide AI applications for the next school year. Hosted in partnership with the California Teacher Development Collaborative (CATDC) at the Midland School in Los Olivos, CA, USA.

Online Workshops

January 7 to April 1, 2026. I’m facilitating “Leading in an AI World,” a four-part online series for school leaders navigating the complexities of AI integration at school. We’ll review the AI landscape, look at how to shape mission-aligned positions and policies, and explore a variety of ways to engage colleagues, students, and families in meaningful AI learning experiences. Offered in partnership with the Northwest Association of Independent Schools.

January 22, 2026. I’ll be doing a second run of “AI and the Teaching of Writing: Design Sprint” in partnership with CATDC. This workshop, designed for teachers of writing in all disciplines, offers some important considerations, practical examples, and hands-on exercises to consider how we should adapt the teaching, practice, and assessment of writing in an AI age.

Links!

I had this great plan to write a post that explored Arvind Narayanan and Sayash Kapoor’s thought-provoking paper “AI as Normal Technology” in the context of education, but Leon Furze beat me to it with “GenAI is Normal EdTech.” Both pieces are helpful in contextualizing generative AI in the history of technology as well as in planning for what our AI future will look like.

On the topic of our AI future, read Rob Nelson’s “What Comes After the Chatbot?”

Helpful new resources on AI’s environmental impact: The New Yorker just put out a long piece on data centers, The Washington Post did a podcast episode on comparing the environmental impact of chatbot use to other digital tools, and Jon Ippolito put together an app that compares the energy use of different digital activities.

Daisy Christodoulou and her team have been researching how effective AI is at assessing writing, and their latest report found it to be “uncannily good.”

The 404’s series on lawyers who are getting fined for including AI-generated errors and fabrications in their briefs is so fascinating to me. How different are their reasons from the reasons students give for cheating?

I love Natalia Cote-Muñoz’s ongoing experiments with using AI as an assistant in her own writing. Lots of ideas here that you can try yourself.

Andrew Cantarutti has a helpful overview of two recent studies on the negative impact of screentime on student learning.

Thank you for sharing your Agentic AI explorations, Eric. I'm a high school English teacher that's been grappling with how to adjust to AI for a couple of years now. Like you say in this piece, the more I learn and explore, the more I'm left with even more questions.

Here's a few I'm thinking about now after reading your post:

What ethical and privacy concerns should we have about giving Agentic AI access to our drives, email, and calendar? What's the incentive for these companies to create these tools? Even money generated from premium level subscriptions doesn't come close to covering costs, so these tools at this point are effectively loss leaders, right? At some point if not already, our personal data or emerging dependency on these tools will be monetized. What will that look like in education or our personal lives?

AI slop and humanity

In a world where anyone can effortlessly create a convincing "Jeff Rogers," how do we know what or who is real and what isn't? And as you note, how can we identify real humans or real learning when fakes are so easy to generate?

Thanks again for your post and for sharing your thoughts and work on AI and education.

Fascinating and thought-provoking as always, Eric. Thank you for sharing your thoughts.