Back to School with AI, Part 5: Making Decisions about AI

AI is not a problem to be solved. It's a polarity to be managed.

How should we make decisions about AI?

When I talk with schools and learning organizations about what to do (or not do) about AI, the complexity and scope of the issue can seem overwhelming. AI is evolving so rapidly that it’s hard to feel like we are being proactive when we seem to be constantly reacting to new features or new use cases we hadn’t imagined before.

These conversations have reminded me that it’s tempting to equate making decisions with solving problems: we make a choice, and we move on to the next problem to solve. But no single decision is going to “solve” AI for us. Fortunately, there are other ways to think about managing complexity.

A Polarity Management Approach to AI

Polarity management is a term first introduced in Barry Johnson’s book of the same name. Learning about polarity management reframed how I think about decision-making. When we are solving a problem, we are making a clear choice (When should we schedule that special assembly? Which learning management system should we use?) and achieving resolution. Problems require an either/or mindset.

When we are managing a polarity, we are seeking balance between two opposing ideas. Polarities require a both/and mindset that recognizes that many issues organizations face are complex and not easily solved. These issues require holding two ideas together at the same time and managing the ongoing process of keeping them in balance. This is why, when describing the two poles that make up a polarity, you should use the word “and” instead of “or” or “versus.” This concept is, of course, much older than polarity management: it is embodied in ideas like yin and yang from Chinese philosophy.

Here is a good three-minute overview from Jennifer Garvey Berger:

Schools are complex, human-driven organizations, environments where polarities abound. Here are a few I’ve worked on with teams of educators and administrators:

Teacher autonomy and Consistent student experience

Knowledge and Skills

Do more and Slow down

Changing traditions and Keeping traditions

Communicating too little and Communicating too much

In my conversations about AI, I find myself thinking about one polarity in particular:

Doing what we know and Exploring the unknown

This isn’t a polarity related to AI only. It’s a polarity organizations have faced any time innovations or disruptions occur in their fields. Because AI is so powerful and is evolving so rapidly, though, my sense is that schools are feeling this tension acutely right now.

As I’ve tried to write about in this series, AI can support what we know to be true about learning and it presents opportunities to rethink and transform traditional assessment models. It emphasizes the need for clear academic integrity policies and asks us to reconsider what academic integrity looks like in an AI age. It challenges our commitment to diversity, equity, and inclusion through its problematic design and it has also been shown to support more equitable outcomes and improve accessibility. It reminds us that it’s important to do what we know and it empowers us to explore the unknown.

How do we make sense of this polarity as a community? How might teams think through this complexity together? What decisions do we need to make to keep this polarity in balance rather than have our school lurch from one side to another?

Polarity Mapping as Visible Thinking

Polarity mapping is a technique for making sense of polarities and equipping ourselves to make decisions that keep a polarity in balance. It’s one of my favorite activities to facilitate with teams because it offers a clear and simple framework for collaborative thinking about complexity. It asks us to make our thinking visible so that others can react to and build on our ideas. It reveals individual preferences for or biases towards certain poles and helps teams navigate those preferences and biases openly. By the end of a polarity mapping session, teams have a visual document that helps them not just to understand the polarity to be managed, but to act in intentional ways to keep that polarity balanced.

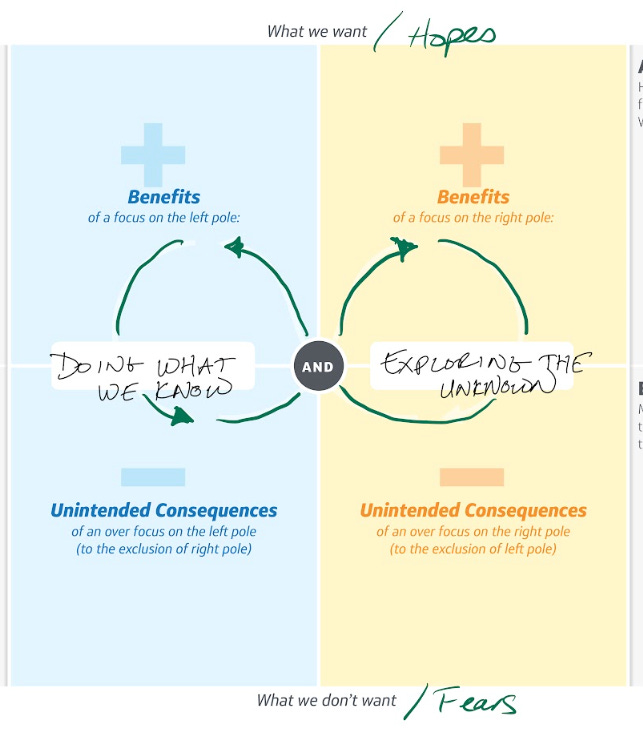

Polarity mapping begins with a simple graphic organizer (I’m using Stephen Anderson’s version here).

Mapping begins in the center of the document and then works outward. Start by naming your polarity in the middle and then identifying both the “benefits” and “unintended consequences” of each pole. Anderson thinks of benefits and unintended consequences as “What we want” and “What we don’t want.” I also think of them as our “hopes” and “fears.”

Take special note of the infinity loop, the arrows moving in a cycle in the middle. This is the most important part of the map: it’s a visual reminder that keeping a polarity in balance is an ongoing process. In polarity management, we make frequent and intentional decisions to keep the loop mostly on the benefits side. This is to keep us from lurching from one pole to another, reacting to negative consequences more than we are proactively seeking benefits.

Once you’ve named the polarity, you can start working together to imagine what the benefits and unintended consequences are for each pole. Do this with Post-Its so you can move ideas around, edit them, or build on them.

These are some of my hopes and fears. If I were facilitating this with a team, new and different ideas would emerge. Naming benefits and unintended consequences clarifies what we are looking for when we are managing a polarity. For example, if we start to see new voices in our community driving the AI conversation (top right), then we know we are benefiting from exploring the unknown. If confusion starts to proliferate about meaningful and valuable use of AI (bottom right), then we are leaning too hard into the unknown and starting to feel some negative consequences.

Once you’re clear about the benefits and unintended consequences, you can move to the outer edges of the map (marked in gray in Anderson’s version), identifying action items and early warning signs for both poles. These warning signs are, as Berger mentions in the above video, “weak signals.” Monitoring these signals is how we balance doing what we know and exploring the unknown, aiming to reap the benefits of both while avoiding unintended consequences.

This is why I think polarity management is such a good skill to have for navigating AI this school year and beyond: the complexity is only going to increase, and we will continue to have to make decisions as AI evolves. We should be seeking balance, not resolution.

How are you approaching decisions about AI? Does polarity management resonate?

Upcoming Workshops

Join me for some live learning about AI.

I’m so happy to continue my partnership with the California Teacher Development Collaborative (CATDC) to offer online workshops on AI. On October 24, I’ll be facilitating “Talking with Students about AI” and on November 7, I’ll be facilitating “AI, Assessment, and the Question of Rigor.” These are open to all, whether or not you live/work in California.

I’ll be presenting at the Innovative Learning Conference at the Nueva School in San Mateo, CA, USA, October 26-27. My session is titled, “Redesigning Assessments with AI and Agency in Mind.”

Links!

Engaging, thoughtful essay from Chris Dede about how AI is changing and will change how we think about academic integrity.

Emily Pitts Donahoe and two of her students co-wrote this great piece on why AI should continue the movement to rethink grading practices.

A Colorado State University repository of prompts and assignments for using AI with students. The long introduction is worth reading, but you can scroll to the bottom to find links to examples.

I like Tricia Friedman’s playbook for guided conversations with students about generative AI and academic integrity. It’s hands-on and discussion-based.

Thanks for reading! If you enjoyed this post, I hope you’ll share it with others. It’s free to subscribe to Learning on Purpose. If you have feedback or just want to connect, you can always reach me at eric@erichudson.co.