Using AI for Search and Research

We talk a lot about what AI can help us do. What can it help us find?

I spent an afternoon earlier this week trying a variety of AI tools for search and research. As I’ve written about before, I’ve been educating myself about the role of trust in both student and professional learning. I hadn’t yet used AI for search and research in any intentional way, so I picked a few tools that have been recommended to me and used the same initial prompt in all of them:

“How do I redesign assessments to build trust with students?”

This post is just a summary of my initial exploration, not a list of recommendations. I don’t (and didn’t try to) cover all available search and research AI tools, and I am a relative novice in most of the ones I did try. I used all of them at the free level, though most of them (except for Bing and Google) offer a variety of premium-level services that they claim improve the experience.

I did a general search in Perplexity, Bing, and Google.

Perplexity is an AI-powered search engine. Its free level is powered by GPT-3.5 (its pro version offers access to more sophisticated models as well as other features). Unlike ChatGPT, which uses a dataset that’s more than a year old, Perplexity is connected to the internet and can search it as part of fielding your query. It shares a response in the same natural language format as any chatbot, but it also curates a list of resources for you and annotates its responses with links to those resources.

When I entered my prompt, Perplexity curated a number of resources I probably would have found in a couple of minutes of scrolling through a Google search. More useful to me, though, was how its natural language response was annotated. If I liked one of its ideas, I could click on a link that led me to the resource its response was based on.

Perplexity also has a number of ways to tailor searches. “Focus” allows you to limit searches to academic papers, YouTube videos, or Reddit threads, among other filters. “Collections” allow you to organize and share searches on certain topics (could be huge for group projects). “Copilot” brings an AI assistant into your search.

A pleasant surprise when I tried Copilot: after I submitted a prompt, it asked me what subject and age level I taught. After I told it I taught middle school math, its response included ideas and resources related to middle school math.

As the dialogue continued, however, it rarely chimed back in. I would have loved this “search assistant” to have a stronger presence as I worked through my ideas.

The experiences of using Bing and Google’s beta AI feature were very similar: they provide an annotated natural language response with a playlist of resources. Neither has as many customization options as Perplexity. Using Bing does give you free access to GPT-4, OpenAI’s more sophisticated model, so it offers the same kinds of multimodal capabilities as GPT-4 in ChatGPT: it can analyze and create images, interact in audio, etc. One thing I liked about Google’s new AI feature: you interact with it at the top of a search, so you can always scroll past it and engage in more traditional browsing of results below.

I can imagine using these tools for searches on complex topics like assessment or for help on tasks like fixing my broken blender. For quick, fact-based searches (“local blender repair”), I’ll probably stick with traditional search. My main takeaway: given how accessible AI-powered search is, I don’t see why anyone would use ChatGPT to find out about something. It would be like using Wikipedia without being able to access the footnotes.

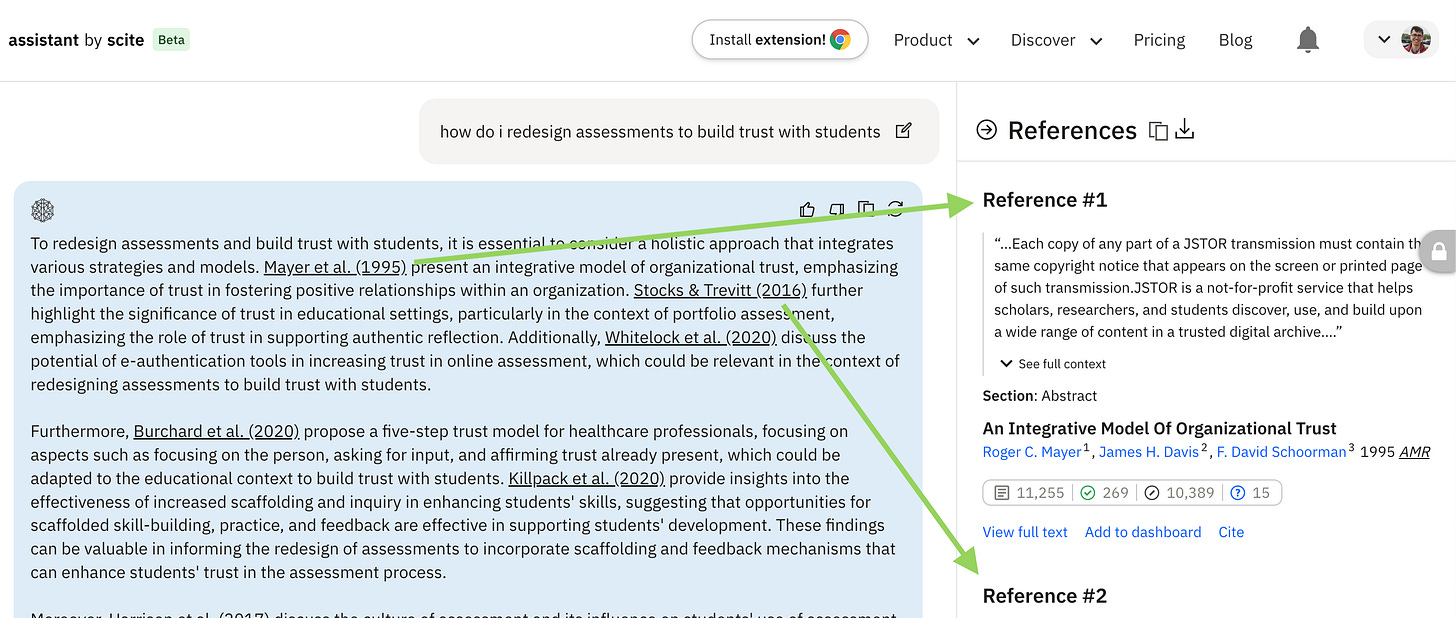

I researched academic papers in Consensus, Elicit, and Scite.

For academic research into my prompt, I explored Consensus, Elicit, and Scite’s Assistant, which access databases like Semantic Scholar and the National Institutes of Health to curate research playlists. They use generative AI to create annotated research summaries linked to those playlists.

Some helpful features these research tools offer that the general search tools don’t:

The ability to extract specific information from papers. For example, Elicit lets you add columns to a table that pull specific elements from each suggested paper so you can compare them.

The ability not only to summarize lists of sources, but also to dive deep into individual papers. For example, Scite generates reports that organize and link to places where each paper is cited and to other papers it cites. Consensus uses generative AI to show you the method, sample size, and key findings for each paper.

The ability to export search results into a CSV file with titles, authors, abstracts, DOI links, and more.

The ability to generate citations in a variety of formats.

I really appreciated the AI-generated summaries in these tools. Again, it reminded me of using Wikipedia: the summaries offered me an overview in accessible language and many pathways for deeper exploration. The annotations make that deeper exploration easy. When I begin a research project, I often feel overwhelmed, and these tools went a long way toward helping me feel more in control of the process.

I used ChatPDF for dynamic reading of academic papers.

I came across two studies that I wanted to explore more deeply, memorably titled “Assessing Trust in a Classroom Environment Applying Social Network Analysis” (discovered in Perplexity) and “Increased Scaffolding and Inquiry in an Introductory Biology Lab Enhance Experimental Design Skills and Sense of Scientific Ability” (discovered in Scite).

Many of the widely available chatbots can read and process files in multiple formats (something I often use them for). For absorbing long, dense documents like academic papers, I’ve enjoyed using ChatPDF. When you upload a PDF, it displays the paper on the left and engages you in a chatbot dialogue about the document on the right. It also annotates its responses with linked references to the text, so you can toggle between the chatbot response and the primary text.

I used to have to slog through documents like this and hope I would find elements relevant to my research goals. In contrast, the reading experience of ChatPDF is dynamic for me: moving from the AI-generated summary into the primary text and then back into the chat to ask follow-up questions is engaging and effective. Rather than exhausting myself just navigating the document, I spent my energy reading parts of the text closely and using the chatbot to push my search further. This is the way we should conceive of rigor in the age of AI.

Search and research are pathways to authentic learning with AI.

As I did this research on trust and assessment, I found myself learning what I need in order to trust AI for search and research. The tools I used here had one thing in common: they offered more transparency than ChatGPT or similar chatbots. Transparency, in this case, takes the form of external links to cited resources, internal links to relevant parts of the source text, and easy access to the source material and its references.

As with the Dewey Decimal System and electronic databases and Google and Wikipedia, AI is changing how we organize knowledge, find information, and learn from research. It is another paradigm shift in our access to knowledge and our ability to partner with technology to do sophisticated work on topics that we care about.

Search and research might be a case study for collaborating with students on AI. In order to use AI to conduct high-quality search and research, we need instruction, practice, feedback, and ongoing support. We need to understand how to use generative AI across different types of tools and platforms. We need to know that while AI can’t (yet) be a final authority, it can be a bridge and an assistant, connecting us to vast libraries of knowledge and helping us learn from them.

Upcoming Ways to Connect with Me

Speaking, Facilitation, and Consultation. I’m currently planning for spring and summer here in the northern hemisphere (April to August 2024). If you want to learn more about my consulting work, reach out for a conversation at eric@erichudson.co or take a look at my website. I’d love to hear about what you’re working on!

Online Workshops. I’m thrilled to continue my partnership with the California Teacher Development Collaborative (CATDC) with two more online workshops. Join us on March 18 for “Making Sense of AI,” an introduction to AI for educators, and on April 17 for “Leveling Up Our AI Practice,” a workshop for educators with AI experience who are looking to build new skills. Both workshops are open to all, whether or not you live/work in California.

Conferences. I will be facilitating workshops at the Summits for Transformative Learning in Atlanta, GA, USA, March 11-12 (STLinATL) and in St. Louis, MO, USA, May 30-31 (STLinSTL). I’m also a proud member of the board of the Association of Technology Leaders in Independent Schools (ATLIS) and will be attending their annual conference in Reno, NV, USA, April 7-10.

Links!

If you want to dive more deeply into Perplexity, use ’s detailed review of its features to guide your exploration of it.

A terrific LinkedIn post from Tricia Friedman on what an AI-generated “selfie” taught her about the power of synthetic media.

Google says it has developed a bot that can do Olympiad-level geometry.

- has done us all a huge favor: he’s reviewed more than 100 AI syllabus statements, curated the best ones, and explained what he learned from them.

Lee Ann Jung with simple and meaningful ways to change the tone, language, and design of rubrics to be more student-friendly and less deficit-focused.

Maha Bali offers helpful advice (and, as always, dozens of linked resources) on how we should approach teaching and learning one year after the debut of ChatGPT.
