What Schools are Asking about AI, Part 3

An update on what I'm seeing and hearing at schools

Every few months, I write a post with updates from my travels, sharing some of the questions and answers from the schools and learning organizations I work with. If you want to look back, you can find part one here and part two here.

Why should I be forced to compromise my beliefs and adopt a tool that I think is too ethically compromised and harmful for use at school?

This question came up in the last “What Schools are Asking About AI” post, but I want to raise it again because it has become a more frequent and more powerful element of my visits to schools. I’ve met groups of educators who organize themselves around “intentional AI resistance” or “conscientious objection” or being “New Luddites.”

What you believe matters, and you should be able to make decisions based on your beliefs. However, when you work in a school, engagement with the world is not something you can opt out of. Marc Watkins writes about this far more eloquently than I could in “Engaging with AI is not Adopting AI.”

How do I keep up with all the AI discourse and new tools?

The only answer I can offer here is my own approach. First, generative AI is not the topic in education I care most about. I’ve become so invested in it, however, because it is having an impact on topics that I have long cared about: learner-centered pedagogy and assessment, student agency, equity, responsive instructional design, and school’s relationship to the world beyond it. So, I follow people who think deeply about those areas (Maha Bali, Dan Meyer, Anna Mills, Leon Furze, and others). I pay attention to the people they follow and link to. I also follow people whose content pushes the edge of my technical understanding (on a good day, I understand about 40% of what Mitko Vasilev writes, but I’m getting better!). I follow people who deliver succinct summaries of what’s happening in the AI industry (Claire Zau and Charlie Guo).

I do not read everything these people publish. That would be impossible. I do not read AI books unless they are written by people with deep experience who offer durable, longitudinal perspectives (Fei-Fei Li’s The Worlds I See is a good example). I do not sign up for or try every single AI tool that comes my way; instead, I pay close attention to (and ask for) recommendations from people in schools who are finding success.

What would you be doing with AI if you were still in the classroom?

If you’ve been following this newsletter for a while, you know that I was a classroom teacher for 12 years, mostly middle and high school English. If I were teaching right now, I would be doing three things.

Talking about and using AI with my students. I would bring AI out of the shadows it has been living in at schools since ChatGPT came out. The most important generative AI skill people can develop right now is critical literacy. Over the course of this year, I would be setting up discussions and debates about AI’s role in school and the world. I would be dedicating some class time to demonstrating a variety of chatbots and AI tools, asking bots to respond to my essay prompts, to revise writing, to help me prepare for quizzes and tests, to brainstorm ideas, etc. I would ask my students what they thought and co-construct AI guidelines with them.

Building a prompt library for my students. I have been inspired by educators like Annie Fensie who are not just talking to students about AI, but providing them prompts and tips that guide them towards productive, process-oriented use of AI as an assistive technology. I would want to make it easier for my students to do the right thing when it came to using AI for their schoolwork, and a prompt library could help.

Asking students to show their learning in a wide variety of ways. To ensure students practiced thinking for themselves, I would move a lot of the process work I used to assign as homework into class: brainstorming ideas, curating and analyzing evidence, drafting, and revision. At least one of my writing assignments would ask students to be “AI assistants” where they tried to get AI to write something good, not unlike what I tried in my last post. I would also do analog, supervised, in-class writing. I would spend more time devising creative, “AI-resistant” writing prompts that demand personal perspectives and/or divergent thinking. I would be doing more oral defenses of written work than I used to.

In other words, I would want my students to get a lot of looks at the different ways we think and write, with and without AI.

What is a school that is a model of AI success?

I get some version of this question at almost every school I go to. I’m not sure there’s one definition of “AI success,” much less one school that has achieved it (although, if you work at that school, I would love to hear from you!). Generative AI is a very complex tool, and its impact is also complex and not fully understood. We need to let go of the idea that we can “solve” the AI challenge any time soon.

When asked about this, I encourage schools to look for successful individuals over successful institutions. The most interesting and useful AI work I’m seeing is being done by individual educators and students. I also encourage schools to set their own standards for success, and to make the goals meaningful and manageable. Where do you want to be with AI in one year? Three years? What is one thing you can decide or create right now, no matter how small, that will help your community feel a sense of success?

I am concerned about the privacy of my data and work and that of my students. Should I still use AI and ask them to do so?

Everyone should practice basic data hygiene when using any publicly available, cloud-based technology, including AI chatbots. Don’t share personal or private information. If you upload files or other resources, scrub them of any identifying information (replace names with numbers, for example, or delete email addresses). Remember that children under 18 are restricted from using certain AI tools.

Unless the terms of use for the tool specifically say otherwise, you should assume that your inputs are being used to train the models. Some bots have a feature in their settings where you can turn this off (ChatGPT and Perplexity both offer this, for example). I meet many teachers who do not want to upload their lesson plans, materials, or rubrics into AI for this reason. They consider these things to be their intellectual property. That’s a valid choice, as is my choice to upload my work into AI because it helps me do my job. I would ask that teachers treat their students’ work with the same care. You should be familiar with how AI bots, plagiarism detectors, and other tools use the student content you upload to them.

Finally, if your school has purchased AI tools or platforms, check with your technology team to learn more about what privacy and data protections those tools offer.

What worries you?

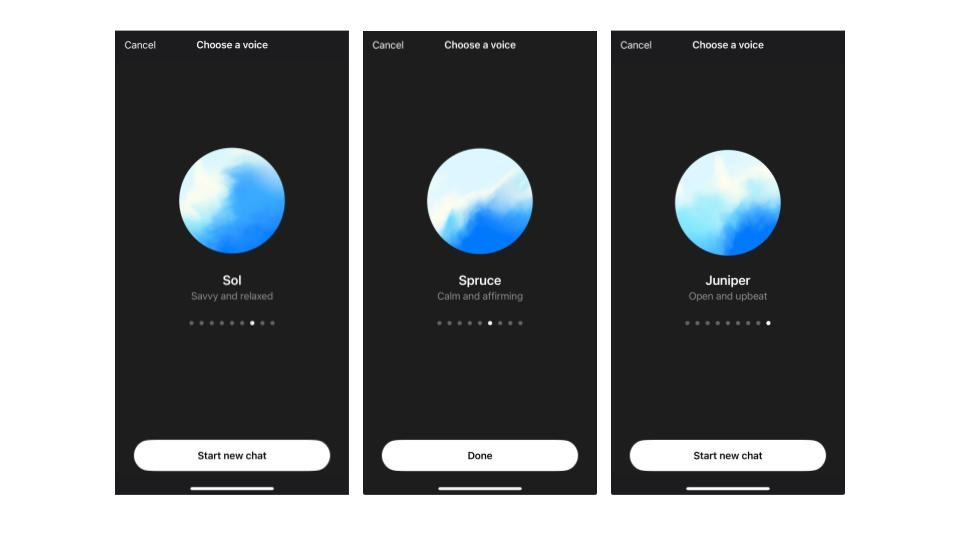

The other day, I was testing out ChatGPT’s Advanced Voice Mode feature. I opened the ChatGPT app on my phone, chose a “persona” voice to talk to, lay down on my couch, and just started talking about my week: a few wins, a session I facilitated poorly, my to-do list, my dog’s mystifying new habit of sitting at my feet and glaring at me for no reason, etc.

In terms of realism, it impressed me. It responded quickly and in a human, podcast-quality voice. It expressed what could be interpreted as a sympathetic reaction, and it asked follow-up questions. At no point did I feel the same satisfaction or engagement I feel when I speak to another human, but I still found myself extending the conversation. We talked for about 10 minutes before I realized how much time had passed.

I closed the app and wondered, why did I keep talking to this bot when I wasn’t finding it to be a particularly helpful or engaging conversationalist? I landed on two reasons: 1) the novelty of having a realistic conversation with AI and 2) speaking freely with something that had no feelings about or personal stake in what I said. I spoke without fear of the bot’s reaction. The motivator was not the quality; it was the privacy.

This is all to say, I am worried that people will be drawn to AI because it renders vulnerability unnecessary.

What makes you hopeful?

Earlier this week, I presented to the students of Harpeth Hall School in Nashville, TN, USA. I was introduced by two students who got up on stage and told a story about how they asked two teachers for help crafting their introduction. They then played a recording of the teachers introducing me. At the end, they revealed that the entire thing had been generated by AI. The room filled with the sound of hundreds of people gasping and laughing.

This is the kind of thing that’s giving me hope. I am seeing more open, playful, and thoughtful exploration of generative AI, exploration that moves AI from a place of fear to a place of discussion and study. I am seeing teachers ask students about AI, and vice versa. I’m seeing schools celebrate students who are learning about AI through research, debate, or community engagement. I’m seeing teachers share their AI experiments with each other in peer-based professional learning. It is still so early. We have so much to learn.

Upcoming Ways to Connect with Me

Speaking, Facilitation, and Consultation

If you want to learn more about my work with schools and nonprofits, reach out for a conversation at eric@erichudson.co or take a look at my website. I’d love to hear about what you’re working on.

In-Person Events

November 20: I’ll be co-facilitating “Educational Leadership in the Age of AI” with Christina Lewellen and Shandor Simon at the New England Association of Schools and Colleges (NEASC) annual conference in Boston, MA, USA.

February 24: I’ll be at the National Business Officers Association (NBOA) Annual Meeting. I’m co-facilitating a session with David Boxer and Stacie Muñoz, “Crafting Equitable AI Guidelines for Your School.”

February 27-28: I’m facilitating a Signature Experience at the National Association of Independent Schools (NAIS) Annual Conference, “Four Priorities for Human-Centered AI in Schools.” This is a smaller, two-day program for those who want to dive more deeply into AI as part of the larger conference.

Online Workshops

November 21 and December 11: I’m part of PAIS’s “Pedagogical Prowess” series, and I’m offering a two-part workshop on “Designing and Facilitating Assessments that Support Student Agency.”

Links!

One of my favorite thinkers and writers, Tressie McMillan Cottom, has a great “mini-lecture” on AI and power (you should also read her book Lower Ed).

Another of my favorite thinkers and writers, Audrey Watters, is returning to the edtech beat. She just wrote about “Just So AI Stories” in her newsletter (you should also read her book Teaching Machines).

You may have read a story about a young man in Florida who died by suicide after a months-long relationship with a bot on Character.AI. The article and the accompanying interviews with journalists covering the story in this episode of “Hard Fork” offer important information about how bot companions work and how unregulated the technology is.

Alaska’s Education Commissioner used generative AI to write a proposed smartphone policy for the state’s schools. How do we know that? Because the citations in the published document were fabricated by AI, and neither she nor her team bothered to verify them.

“I took a ‘decision holiday’ and put AI in charge of my life.”

Google has released a promising AI watermarking technology that might help us more easily recognize AI-generated content.

If AI could generate a case study of all the challenges (AI-related and otherwise) in high-achieving schools right now, it might be this real-life case of parents suing a school for lowering their son’s grade because he used generative AI to help him do research.
